Alibaba has just launched Qwen 2.5, including its Max version. This is an artificial intelligence model that competes directly with the familiar Western big tech companies, such as OpenAI and Google, but above all with its Chinese compatriot DeepSeek, which has released a competitive open-source model that is dramatically cheaper.

DeepSeek's release first rattled stock markets and the global AI market, then drew the attention of European and US privacy regulators, which led to accusations, bans and restrictions. Lights, shadows and bans aside, within a few days DeepSeek left a lasting mark on how artificial intelligence is conceived and used.

Onto the field of this technology war steps Alibaba, a Chinese giant that needs no introduction, claiming in plain terms that its Qwen 2.5-Max AI puts DeepSeek in its place and gives the Western giants a run for their money.

Is Qwen 2.5-Max really as competitive as Alibaba claims?

First of all, Qwen 2.5-Max was trained on more than 20 trillion tokens, which means the model has been fed an enormous amount of information. On paper, then, Qwen 2.5-Max should have strong knowledge, coherence and reasoning ability.

However, more data also means higher computational costs and possible bias, especially if the dataset is not well curated. Data quality matters more than quantity: a factor that already threatens to deflate the muscles Qwen's parent is flexing.

But Alibaba knows this well. That is why Qwen 2.5-Max uses a MoE (Mixture of Experts) architecture, which activates only the parts of the model relevant to each task. This brings computational efficiency, which in turn means:

- lower resource consumption,

- lower energy consumption,

- reduced operating costs,

- smaller environmental impact,

- faster computation,

- better economics, because the cost per processed token can be lower than in models that use all their parameters on every request.
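To make the idea of "activating only the relevant parts" concrete, here is a minimal sketch of top-k expert routing in plain Python. It is an illustration under stated assumptions, not Alibaba's implementation: the four toy experts, the gate scores and the `moe_forward` helper are all hypothetical, and a real MoE layer uses neural sub-networks and a learned router.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, gate_scores, experts, top_k=2):
    """Route x to the top_k experts with the highest gate scores.

    Hypothetical helper for illustration only.
    """
    # Indices of the top_k highest-scoring experts.
    chosen = sorted(range(len(experts)),
                    key=lambda i: gate_scores[i], reverse=True)[:top_k]
    # Renormalize the gate weights over the chosen experts only.
    weights = softmax([gate_scores[i] for i in chosen])
    # Only the chosen experts are evaluated; the rest are never called.
    output = sum(w * experts[i](x) for w, i in zip(weights, chosen))
    return output, chosen

# Four toy "experts"; in a real model each would be a neural sub-network.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x * x, lambda x: -x]
# Assumed router scores for one input; a real router computes them from x.
gate_scores = [0.1, 2.0, 1.0, -1.0]

output, chosen = moe_forward(3.0, gate_scores, experts, top_k=2)
print(chosen)  # [1, 2]: only two of the four experts ran
```

With `top_k=2`, half of the experts are skipped for this input, which is where the savings in compute, energy and cost per token come from.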

These efficiency gains undoubtedly help offset the cost of training on so much data. In addition, the model was further refined with SFT (supervised fine-tuning on data labeled by experts, to improve answer quality) and RLHF (reinforcement learning from human feedback, to make answers more natural and better aligned with user preferences). These steps should therefore yield more accurate answers.

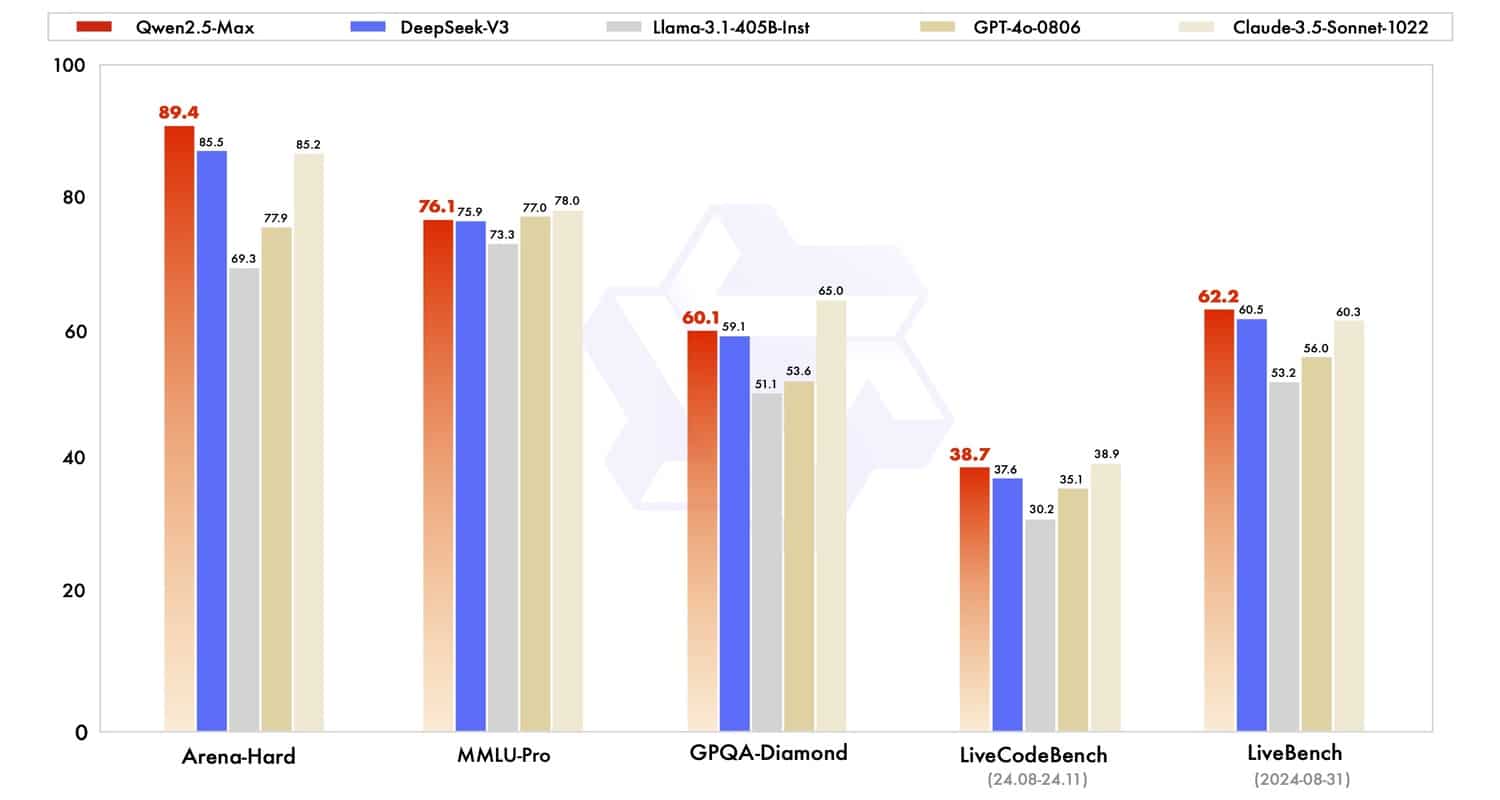

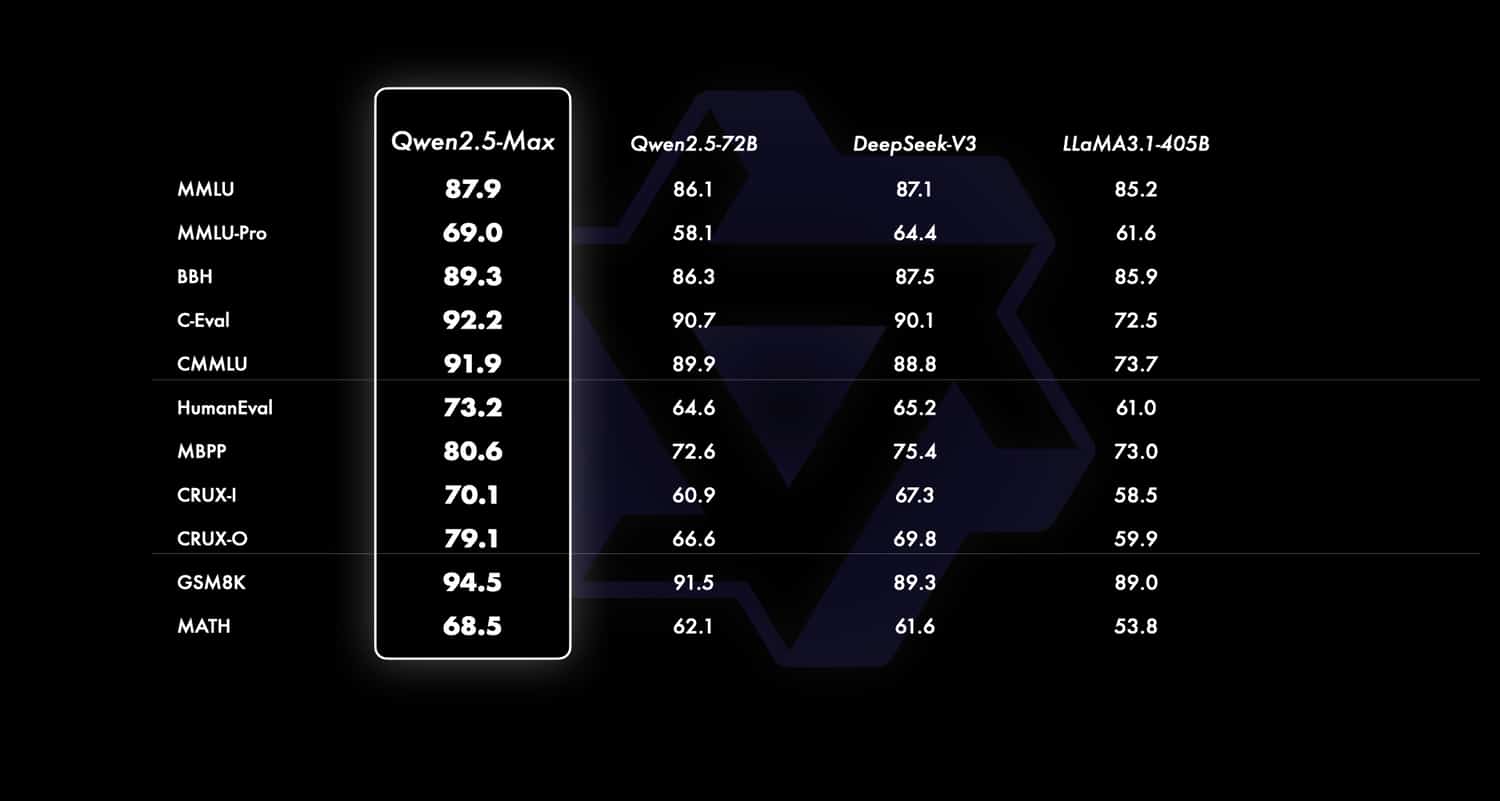

On this front and in language understanding, Qwen 2.5-Max has proved superior to DeepSeek in several benchmarks, and it gives other major Western players a run for their money too.

So yes: Qwen 2.5-Max really is as competitive as Alibaba says… but benchmarks and tokens are not everything. Between open-source availability, platform integrations and computational efficiency, the battle is no longer fought on raw performance alone.

Alibaba vs DeepSeek: a home-turf challenge between two opposing philosophies

Like Qwen 2.5, DeepSeek is based on the MoE architecture described earlier, but Alibaba's AI wins on the number of training tokens and on various benchmarks. This suggests that Qwen is more powerful. But let's not forget that DeepSeek released an open-source AI, accessible to developers and companies alike. Such a strategy has had an enormous impact precisely because it could exponentially accelerate the progress and adoption of AI… but what if it were too accessible?

It is no coincidence that DeepSeek has ended up in everyone's crosshairs, yet it remains competitive because it plays on a field that few others are treading and that could win ever more attention and appreciation from people. In this respect Alibaba has preferred to stay in line with Western AI companies, keeping a degree of control over its technology, its uses and its distribution, although Qwen-VL, Qwen-Audio and Qwen 1.x and 2.x (Lite and Standard) are in fact available as open source.

So only the less powerful versions of Qwen are open source, and their licenses carry restrictions on commercial use, unlike DeepSeek's. This reflects a clash of two philosophies: DeepSeek has chosen a more community-oriented strategy, aiming to democratize access to advanced language models; Alibaba instead protects its competitive advantage and monetizes through cloud services and APIs.

Can Qwen 2.5-Max really challenge the AI giants?

The short answer is yes, but the matter is a touch more complex.

Qwen 2.5-Max has excellent capabilities that put it in competition with many other models worldwide, but it is not overwhelmingly ahead. Moreover, comparison data beyond the official figures published by Alibaba itself is still lacking.

Its rise undoubtedly influences the AI market: a battlefield that is difficult, if not impossible, to dominate and that, as we have seen, is shaped by factors other than sheer power. Alibaba therefore offers a valid and potentially more accessible alternative to the models from OpenAI and Google, for example, even though it remains less accessible than its compatriot DeepSeek.

There is also the fact that Qwen 2.5-Max is cheaper than some models. Qwen 2.5-Max costs 1.6 dollars per million input tokens and 6.4 dollars per million output tokens, against 5 dollars and 15 dollars per million for GPT-4o. On this measure Qwen 2.5-Max is roughly 2 to 3 times cheaper (about 3.1 times on input and 2.3 times on output).
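The price comparison can be checked with a few lines of arithmetic. The sketch below uses only the per-million-token prices quoted in this article; the helper names and the sample workload (2 million input tokens, 500,000 output tokens) are made up for illustration, and real prices may have changed since.

```python
# Prices in USD per million tokens, as quoted in the article.
QWEN_MAX = {"input": 1.6, "output": 6.4}
GPT_4O = {"input": 5.0, "output": 15.0}

def request_cost(prices, input_tokens, output_tokens):
    """Cost in USD for a given number of input and output tokens."""
    return (input_tokens * prices["input"]
            + output_tokens * prices["output"]) / 1_000_000

def price_ratio(expensive, cheap, kind):
    """How many times more expensive one model is for 'input' or 'output'."""
    return expensive[kind] / cheap[kind]

print(price_ratio(GPT_4O, QWEN_MAX, "input"))   # 3.125
print(price_ratio(GPT_4O, QWEN_MAX, "output"))  # 2.34375

# Hypothetical workload: 2M input tokens and 500k output tokens.
qwen_cost = request_cost(QWEN_MAX, 2_000_000, 500_000)
gpt_cost = request_cost(GPT_4O, 2_000_000, 500_000)
print(f"Qwen 2.5-Max: ${qwen_cost:.2f}, GPT-4o: ${gpt_cost:.2f}")
```

The overall saving depends on the mix of input and output tokens, so the ratio for a real workload falls somewhere between the two figures.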

Finally, to compete with its AI, Alibaba is betting on tighter integration with its own cloud and e-commerce ecosystem, favoring the Asian market.

Its real ability to establish itself as a global leader will therefore depend on the quality of its real-world applications and on its ability to scale outside China.

Want to try Qwen 2.5-Max? Here's how

To try Qwen 2.5-Max you have two main options:

Qwen Chat: access the model directly through the Qwen Chat web interface. Visit chat.qwenlm.ai, select “Qwen2.5-Max” from the model drop-down menu and start interacting with the AI in real time. You can search the web, ask questions, translate, and generate images and video for free, and if you like you can sign in directly with your Google account.

Alibaba Cloud API: for a more advanced integration, you can use the Qwen 2.5-Max API through Alibaba Cloud. Create an Alibaba Cloud account, activate the Model Studio service and generate an API key. The API is compatible with the OpenAI format, which makes implementation easier for developers. For implementation details, see the official documentation.
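As a rough idea of what an OpenAI-format call looks like, here is a minimal sketch that uses only the Python standard library. The base URL and the model identifier are assumptions that do not come from this article, so check both against the official Alibaba Cloud documentation before relying on them; `build_chat_request` and `send` are hypothetical helper names.

```python
import json
import urllib.request

# ASSUMPTIONS: verify both values in the official Model Studio documentation.
BASE_URL = "https://dashscope-intl.aliyuncs.com/compatible-mode/v1"
MODEL = "qwen-max-2025-01-25"

def build_chat_request(api_key, prompt, base_url=BASE_URL, model=MODEL):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

def send(request):
    """Send the request and return the text of the first reply."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]

# Building the request needs no network access; "sk-your-key" is a placeholder.
request = build_chat_request("sk-your-key", "Hello, Qwen!")
print(request.full_url)
# To actually call the API, use a real key and run: print(send(request))
```

Because the format matches OpenAI's, existing client code can usually be reused by changing only the base URL, the API key and the model name.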

As the one writing this, I have of course had a bit of fun with the chat…